When I first started gathering feedback from customers and employees, I thought a simple “How are we doing?” would do the trick. I was wrong.

I was met with vague responses and data that led me nowhere. After sharing hundreds of surveys containing thousands of questions, here is what I’ve realized: good surveys don’t just ask questions, they ask the right ones.

In this guide, you’ll find:

- The exact questions top-performing teams—product, CX, HR, enablement—use when they need unfiltered input quickly.

- The context: why these questions work (and what to avoid).

- The timing: when to ask them for maximum clarity.

- The action plan: how to use the data without drowning in dashboards.

We cover six proven use cases: customer experience, employee feedback, education, market research, events, and course feedback. You’ll also find tool tips, best practices, and templates to shortcut your way to results.

If you’re in the middle of solving something—or about to make a decision that matters—this guide’s for you.

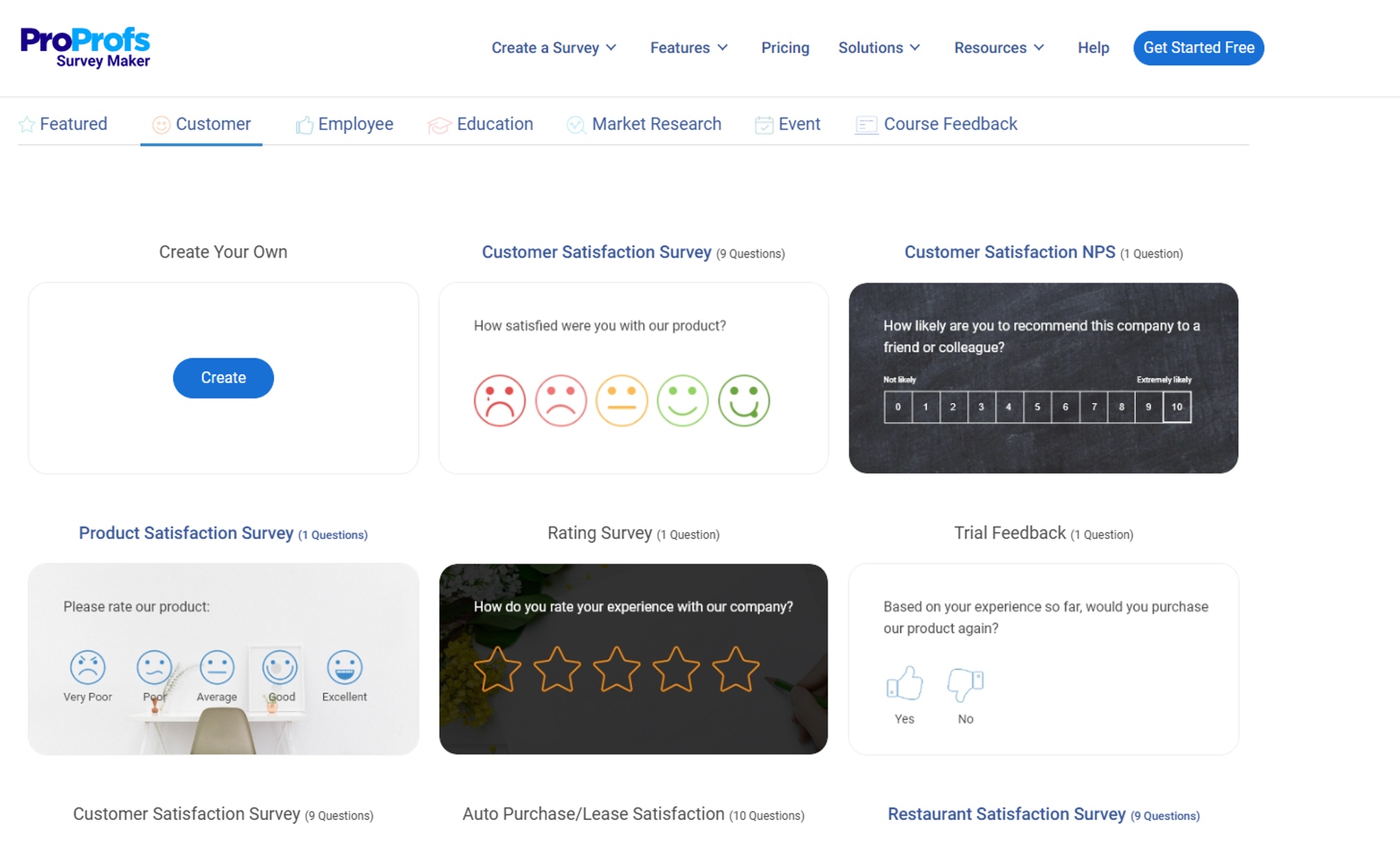

Section 1: Customer Survey Question Examples

Goal: Get a clean read on what’s actually working for your customers—and what’s quietly driving churn, confusion, or disengagement.

1. Onboarding Feedback

When to ask: ~7–14 days after signup or initial activation

You’re not just asking about ease. You’re probing for confidence, early wins, and friction that can snowball later. Use these questions to shape onboarding into a fast path to value.

- How easy was it to get started with [Product]?

Establish baseline usability from the user’s first real touchpoint.

- What was the biggest blocker (if any) during setup?

Spot the roadblocks that delay activation or kill momentum.

- Did you feel confident using the product after your first week? (Yes/No)

Confidence is a leading indicator of retention, so don’t skip it.

- How long did it take before you felt the product was delivering value?

Time-to-value is gold. This tells you how long is too long.

- What part of the onboarding process felt unclear, clunky, or frustrating?

Uncover silent drop-off points hiding behind weak activation data.

- What’s one thing we could have done differently to improve your first week?

Ask for the fix directly; users will usually tell you.

- On a scale of 1–5, how satisfied were you with the onboarding experience?

Quantify sentiment to track improvement over time.

- If we had to remove one part of onboarding to make it faster, what would it be?

Find and kill the bloat. Simplification unlocks scale.

2. Feature Use & Product Feedback

When to ask: After a feature launch or to evaluate the usage of existing features

Use this set to uncover whether your features are driving real value or just bloating the roadmap.

- Have you used [Feature X] in the past week? (Yes/No)

Start by separating users from non-users, so your data stays clean.

- How useful is [Feature X] for your current workflow? (Scale: 1–5)

Measure whether it solves real pain or just looks good in demos.

- What specific task or goal do you primarily use [Feature X] for?

Pin down the job-to-be-done that’s anchoring usage.

- What’s one thing you wish [Feature X] handled better or faster?

Surface friction that’s killing momentum or satisfaction.

- Was [Feature X] easy to discover in the interface? (Yes/No)

Validate whether visibility, not value, is limiting adoption.

- What prompted you to try [Feature X] for the first time?

Understand the trigger moment that turned intent into action.

- How likely are you to keep using this feature going forward?

Gauge stickiness so you can forecast usage and retention.

- If [Feature X] were removed, how would that impact your work?

A blunt-force test for whether this feature actually matters.

3. Customer Satisfaction (CSAT)

When to use: After key interactions or at regular touchpoints

Use CSAT to track how well you’re meeting expectations—not just overall, but at every critical moment in the journey.

- How satisfied were you with your experience today? (1–5)

Quick pulse check to detect friction before it escalates.

- Did we meet your expectations? (Yes/No)

Expectations are the baseline, and falling short here flags a deeper issue.

- What could we have done to improve your experience?

Turn dissatisfaction into direct action items.

- What part of the product or service impressed you most?

Double down on what’s exceeding expectations.

- What part of the product or service fell short?

Spot weak links in delivery before they affect retention.

- Compared to other tools you use, how does [Product] stack up?

Reveal competitive positioning from the user’s lens.

- If you had to rate your overall satisfaction, what would it be? (1–10)

Roll the experience into a single trust-building metric.
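To make the 1–5 satisfaction question trendable, many teams report CSAT as the share of respondents who picked one of the top two ratings. The sketch below assumes that convention; the function name `csat_percent`, the `satisfied_from` threshold, and the sample ratings are all illustrative, so adjust them to however your team defines "satisfied."

```python
def csat_percent(ratings, satisfied_from=4):
    """Share of responses at or above `satisfied_from`, as a percentage.

    Assumes a 1-5 scale where 4 and 5 count as "satisfied"
    (a common convention, not the only one).
    """
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_from)
    return 100 * satisfied / len(ratings)


# Hypothetical answers to "How satisfied were you with your experience today?"
responses = [5, 4, 4, 3, 2, 5, 1, 4]
print(f"CSAT: {csat_percent(responses):.1f}%")
```

Tracking this one number per touchpoint (onboarding, support, renewal) makes it easier to see which moment in the journey is slipping.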

4. Net Promoter Score (NPS)

When to use: Quarterly or biannually

NPS helps you gauge brand advocacy and predict organic growth when used with context, not just a score.

- On a scale of 0–10, how likely are you to recommend [Product]?

Your growth signal, especially when tied to user type or behavior.

- What’s the primary reason for your score?

The real value lies in this follow-up, not the number.

- What’s one thing we could do to improve that score?

Turn detractors into feedback loops.

- What would make you actively promote [Product]?

Unlock the gap between passive use and evangelism.

- Have you ever recommended us before? Why or why not?

Reveal untapped promoters, or missed moments to spark one.
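If you collect the 0–10 recommendation question, the score itself is simple to compute: the percentage of promoters (9–10) minus the percentage of detractors (0–6), with passives (7–8) counted only in the total. Here is a minimal sketch of that standard calculation; the function name and the sample ratings are made up for illustration.

```python
def net_promoter_score(ratings):
    """Return NPS (from -100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)   # scores of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # scores of 0 through 6
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses to "How likely are you to recommend [Product]?"
sample = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(f"NPS: {net_promoter_score(sample):.0f}")
```

Running the same function per segment (plan, role, tenure) is usually more useful than a single company-wide number, since it shows where the promoters and detractors actually sit.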

5. Post-Support Feedback

When to use: Immediately after a support interaction ends

Support is a product experience. These questions ensure it’s helping, not harming, trust.

- Was your issue resolved to your satisfaction? (Yes/No)

The ultimate support outcome: resolution or reroute.

- How long did it take to get a response?

Speed still matters, especially in moments of friction.

- Was the support rep knowledgeable and helpful?

People drive perception, so track consistency here.

- How would you rate the overall support experience? (1–5)

Get a trendable, quantifiable benchmark.

- Did you find the answer on your own before contacting us? (Yes/No)

Assess the strength of your self-serve resources.

- What could we have done to make your support experience smoother?

Uncover small changes that can create outsized trust.

6. Churn & Exit Surveys

When to use: Immediately after cancellation or downgrade

You can’t stop every churn, but you can learn from every exit. These questions clarify root causes and recovery paths.

- What’s the primary reason you decided to cancel?

Start with what broke: feature, value, price, or timing.

- Was there a specific feature or limitation that made you leave?

Highlight build gaps that are actually costing you.

- Were you missing any critical functionality?

Identify product-market fit blind spots.

- Was pricing a factor in your decision?

Use this to evaluate perceived vs. actual value.

- Are you switching to another tool? (If yes, which one?)

A direct line to your competitive threats.

- What could we have done to keep you?

Sometimes all it takes is the right nudge, so find out what it is.

- Would you consider coming back in the future?

Segment win-backs from permanent losses.

7. Ongoing Product Feedback Loops

When to use: Always-on in-app prompts or quarterly campaigns

Keep your feedback loop alive—even when customers aren’t in crisis mode. This is where roadmap clarity is born.

- What’s your favorite thing about using [Product]?

Use this to anchor messaging and double down on strengths.

- What’s your least favorite thing?

Even fans have friction. Fix it before it erodes loyalty.

- What’s one feature you wish we had?

Source ideas directly from active demand.

- What’s the last task you tried to complete but couldn’t?

Uncover high-intent failures your team might not see in metrics.

- What do you still rely on another tool for?

Expose opportunities to expand your product’s role.

- How often do you use [Product] in your workflow?

Frequency is a leading indicator of retention.

- What’s the one thing you’d improve about the product today?

Your quick-win list, straight from the people who use it most.

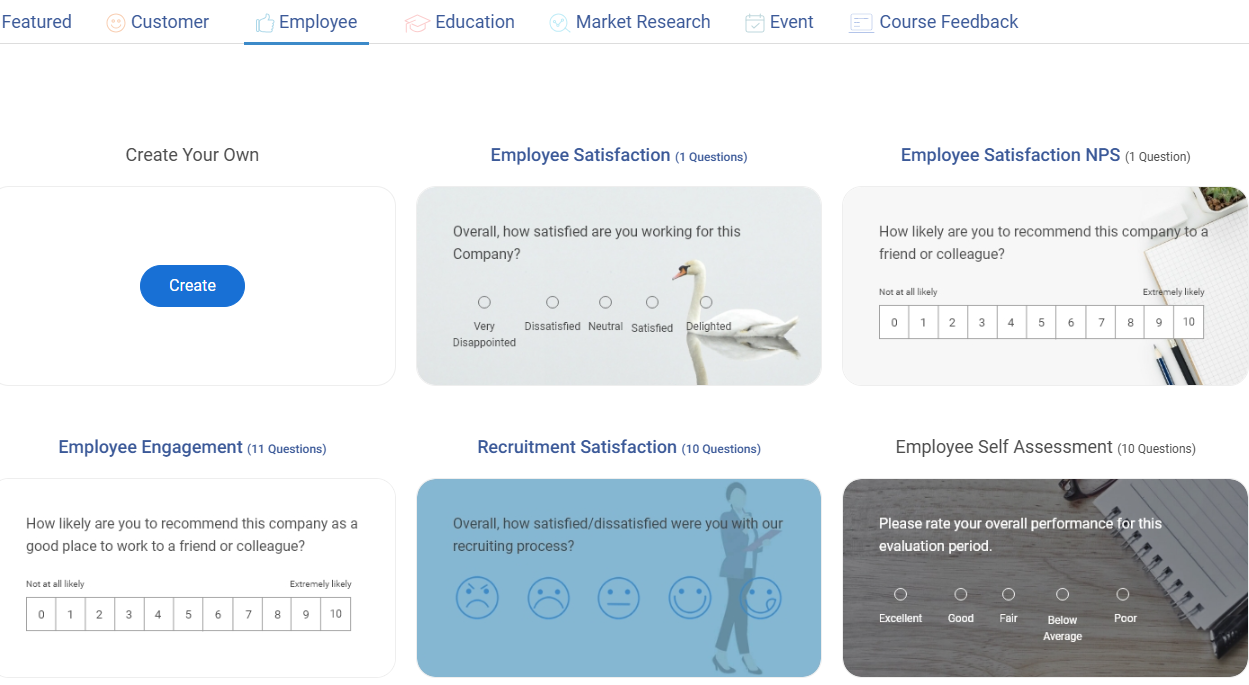

Section 2: Employee Survey Question Examples

Goal: Get honest, usable insights from your team to improve engagement, communication, and culture—without relying on vague check-ins or HR guesswork.

1. Employee Engagement & Pulse Surveys

When to use: Monthly or quarterly check-ins

Use this set to track sentiment, motivation, and retention risk in real time—not just once a year.

- How satisfied are you with your current role? (1–5)

A direct proxy for emotional investment in the work.

- Do you feel valued for your contributions at work?

Recognition is retention fuel, so don’t assume it’s happening.

- On a scale of 1–10, how likely are you to recommend this company as a workplace?

A quick read on employer brand from the inside.

- Do you feel your work makes a meaningful impact?

Connect the mission to the day-to-day.

- Is your workload manageable?

Uncover burnout before it burns through productivity.

- Are you confident in the direction the company is heading?

Alignment on vision is a leadership KPI. Track it.

- What’s one thing that would improve your workday?

Invite practical fixes from the people living with the problems.

- Do you feel connected to your team?

Belonging and collaboration go hand in hand.

- How likely are you to stay at the company over the next year?

A leading indicator of churn before it hits attrition reports.

2. Manager & Team Communication Feedback

When to use: After performance reviews or team changes

Great managers create a great culture. These questions expose communication gaps that metrics can’t.

- How clear are your team’s goals and priorities?

Confusion here leads to wasted execution.

- Do you receive regular and helpful feedback from your manager?

No feedback usually means no growth, or worse, disengagement.

- Do you feel comfortable bringing up concerns with leadership?

Psychological safety is the bedrock of high-trust teams.

- How often do you have meaningful 1:1s with your manager?

Regular touchpoints drive clarity, not just performance.

- What’s one thing your manager could do more of?

Encourage honest coaching opportunities.

- Do you feel your ideas are heard and considered?

Engagement drops fast when people feel ignored.

- How effective is communication within your team?

Gaps here ripple into productivity, trust, and morale.

3. DEI & Belonging Surveys

When to use: Quarterly or after DEI initiatives

Measure more than optics. These questions get to the human experience behind inclusion metrics.

- Do you feel a sense of belonging at work?

Belonging drives performance, and this question makes it visible.

- Are diverse perspectives welcomed on your team?

Diversity means nothing without inclusion.

- Do you feel safe being yourself at work?

Psychological safety isn’t optional. It’s foundational.

- Have you experienced or witnessed bias at work? (Yes/No, optional follow-up)

A simple yes can open up conversations that leadership needs to hear.

- What more could the company do to support inclusivity?

Ground your DEI efforts in employee reality, not assumptions.

- On a scale of 1–5, how inclusive is our work culture?

Track how culture is perceived, not just how it’s intended.

4. Employee Onboarding Feedback

When to use: 2–4 weeks after the new hire starts

Strong starts shape long-term success. These questions help you fix first impressions fast.

- How helpful was your onboarding experience? (1–5)

Set the tone for how someone feels supported early on.

- Did you have the resources you needed to get started?

Identify gaps in docs, tools, and tribal knowledge.

- Was your role clearly defined from day one?

Ambiguity here leads to misalignment later.

- How supported did you feel in your first two weeks?

This tells you whether your team is delivering, not just documenting, onboarding.

- What was the most confusing part of onboarding?

Find and fix the head-scratchers before they scale.

- What would you change about the onboarding process?

Fresh eyes catch blind spots faster than tenured teams.

5. Learning & Training Evaluation

When to use: Immediately after internal workshops, onboarding sessions, or courses

Don’t just measure attendance—measure effectiveness. These questions reveal ROI on training time.

- Did the training help you perform your job better? (Yes/No)

Cut or improve anything that doesn’t drive impact.

- How relevant was the content to your day-to-day work?

Generic content kills engagement, and this helps fix that.

- Were the materials clear and easy to follow?

Clarity affects both confidence and knowledge transfer.

- What’s one thing you learned that you’re already applying?

Reinforces takeaways while surfacing the most useful content.

- What’s one thing the training didn’t cover that it should have?

Fill the gaps next time with what learners actually need.

- Would you recommend this session to a colleague?

Your internal NPS, direct from the learner.

6. Exit Interviews

When to use: Final week of employment

Every exit is a signal. These questions turn goodbye into feedback gold.

- What’s the main reason you decided to leave?

Find the root cause, not just the symptom.

- Was there a specific moment that triggered your decision?

Sometimes it’s one thing, not a slow decline.

- Did you feel supported in your role?

Lack of support is one of the quietest drivers of churn.

- What would have convinced you to stay?

Test your re-engagement levers, even after departure.

- Were you given opportunities to grow?

Career stagnation is a preventable loss if caught early.

- Would you recommend this company to others?

If not, it’s time to dig deeper.

- Is there anything we should know that wasn’t discussed in 1:1s?

Some truths only surface when there’s nothing left to lose.

7. Remote Work & Hybrid Culture Feedback

When to use: After a policy shift or as a regular check-in for distributed teams

Great remote culture doesn’t happen by default. These questions help you build it intentionally.

- Do you feel productive working remotely?

Productivity is perception, so track how it’s evolving.

- How often do you feel isolated or disconnected from the team?

Loneliness can kill morale even when performance looks fine.

- Are virtual meetings well-run and purposeful?

Meetings are culture. Bad ones rot it.

- Do you have the tools you need to collaborate effectively?

Stack issues are a silent killer of remote performance.

- Would you prefer more or fewer in-person interactions?

Use this to inform off-site planning and hybrid rhythms.

- What’s the biggest challenge of remote/hybrid work for you?

Spot trends before they calcify into turnover.

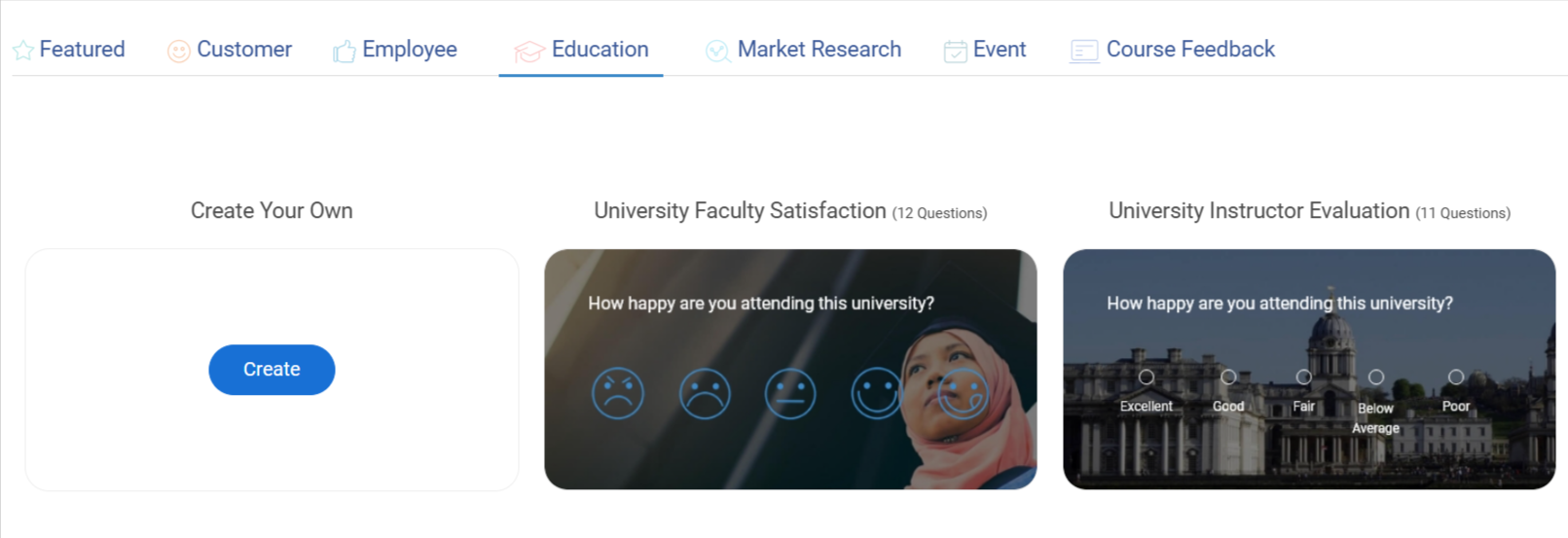

Section 3: Education Survey Question Examples

Goal: Measure learning effectiveness, student experience, and content relevance—whether you’re running internal training, academic instruction, or online courses.

1. Learning Outcomes & Skill Retention

When to use: End of course/module, or weeks after completion

Use student survey questions to assess whether learning has translated into usable skills—and where gaps still exist.

- Do you feel more confident applying the skills covered in this course?

Confidence is a proxy for applied learning.

- How relevant was the material to your current role or studies?

Irrelevant content kills engagement, so ask early and fix fast.

- What specific concepts do you still feel unclear about?

Spot where the learning experience breaks down.

- Have you used what you learned in a real-world scenario yet?

Find out whether theory is turning into action.

- How much of the content do you feel you retained? (Scale: 1–5)

Measure memory, not just satisfaction.

- Would a follow-up session help reinforce the material?

Signals whether reinforcement or review is needed.

2. Pre/Post-Training Survey Questions

When to use: Before and after a structured training program

These questions track progress and surface blind spots in curriculum design.

- How familiar were you with this topic before the training? (1–5)

Baseline understanding helps measure actual learning gain.

- How much did your understanding improve after the training? (1–5)

Self-assessed progress gives directional insight.

- Which topics were most challenging for you?

Use this to prioritize future content revisions.

- What topic do you wish had been covered more deeply?

Learn where depth beats breadth in delivery.

- Rate your confidence in using this knowledge on the job.

Confidence precedes usage, so track both.

3. Instructor & Facilitator Feedback

When to use: Immediately after sessions or modules

Evaluate not just what was taught, but how it was delivered.

- How effective was the instructor at explaining concepts? (1–5)

Strong delivery is as important as strong content.

- Was the pace of the session too fast, too slow, or just right?

Mismatched pacing often tanks retention.

- Did the instructor create an engaging learning environment?

Engagement is a key predictor of retention.

- What did the instructor do particularly well?

Highlight best practices you can replicate.

- What could the instructor improve?

Constructive input helps raise the floor.

4. Content & Delivery Format Feedback

When to use: Post-course or post-module for digital or in-person formats

Not all content works in all formats. These questions tell you which formats work and what to change.

- How clear and well-organized were the course materials?

Disorganized content breaks the learning flow.

- Was the format (video, text, live) effective for your learning style?

Use this to tailor delivery to actual preferences.

- Were the exercises or quizzes helpful?

Hands-on tasks reveal whether theory sticks.

- What would you add, remove, or change about the content?

Let learners design your next iteration.

- How interactive did the course feel?

Interaction boosts retention and engagement, so measure it.

5. Learner Experience & Motivation

When to use: During or after longer courses or programs

Understand what keeps learners engaged—and what pulls them away.

- What motivated you to take this course?

Know the ‘why’ behind enrollment, since it drives expectations.

- Did the course meet your expectations?

This is your fit check: did the promise match the payoff?

- What part of the course kept you most engaged?

Double down on what’s working best.

- What part of the course caused you to lose interest?

Identify boredom or overload zones.

- Would you recommend this course to a peer?

Your organic growth lever, if the answer’s yes.

6. Assessment & Feedback Process

When to use: After graded activities or feedback rounds

Learners don’t just need grades—they need direction. These questions help improve how feedback is delivered.

- Were the grading criteria clearly explained?

Ambiguity leads to distrust and disengagement.

- Did you receive timely feedback on your assignments?

Timeliness affects motivation and course pacing.

- Was the feedback actionable and specific?

Generic comments don’t help anyone improve.

- Did the assessments reflect the learning objectives?

Ensure evaluation matches what was taught, not just what was tested.

7. Course Completion Barriers

When to use: When learners drop out, or at the course midpoint

Most drop-offs are fixable. These questions tell you how.

- What prevented you from completing the course on time?

Identify the blockers that stall progress.

- Was the course too time-consuming or overwhelming?

Use this to adjust workload and pacing.

- Did you face any technical issues?

Platform issues kill trust, fast.

- What could we do to make the course easier to complete?

The most honest answers often come halfway through.

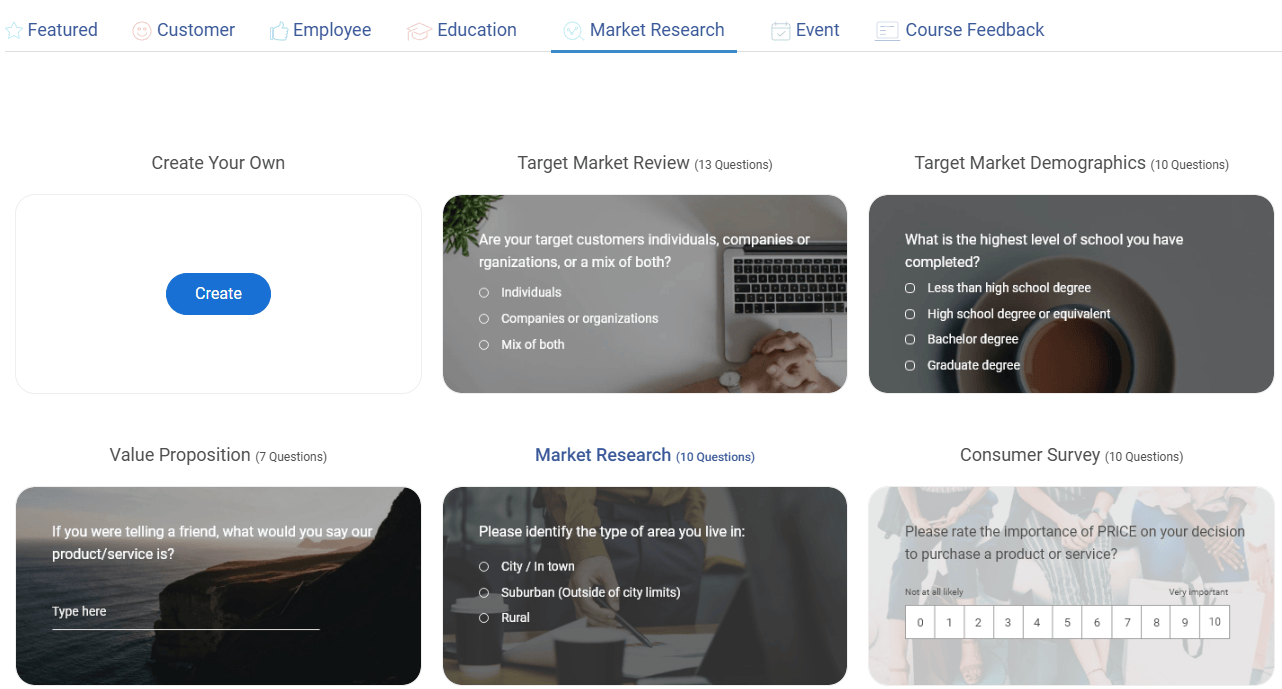

Section 4: Market Research Survey Question Examples

Goal: Understand your target audience, identify market gaps, test positioning, and gather competitive insight to shape smarter go-to-market strategies.

1. Target Audience Discovery

When to use: Early-stage research, persona validation, or entering a new segment

These questions help you define your real buyer, not the one you imagined in a deck.

- What is your current job role?

Pinpoint function and buying authority.

- Which industry do you work in?

Essential for segmentation and GTM targeting.

- What’s your team size or company size?

Scale your messaging, since startups and enterprises think differently.

- What are your top three work-related goals right now?

Reveal the motivators your product can align with.

- What’s your biggest challenge when it comes to [problem you solve]?

This is where urgency lives, so prioritize accordingly.

- How do you currently solve [that problem]?

Uncover DIY workflows and existing alternatives.

- Have you used a tool like [your product] before?

Learn how much education you’ll need in the funnel.

2. Competitor & Tool Usage

When to use: During positioning work or to inform product comparisons

These questions tell you who you’re really competing with—and how to beat them.

- What tools or platforms do you use for [specific task]?

Build your competitive map from actual usage, not assumptions.

- How satisfied are you with your current tool for [task]?

Low satisfaction = switching opportunity.

- What do you like about your current solution?

Preserve key features if you’re replacing a tool.

- What frustrates you about your current solution?

Pain points are your entry wedge.

- Why did you choose your current vendor?

Reveal priorities: price, speed, features, brand.

- Are you considering switching in the next 6 months?

Identify high-intent leads for sales or beta tests.

3. Feature/Problem Validation

When to use: Before building a new product or feature

Test if the thing you’re about to build solves a problem people actually care about.

- How important is [feature or capability] to you? (1–5)

Score feature priority before you scope it.

- How often do you encounter [specific problem] in your workflow?

Frequency indicates urgency and value.

- What do you currently do when [problem scenario] happens?

Uncover broken workarounds and latent needs.

- Would a solution that [value proposition] be useful to you? (Yes/No)

Quick validation of messaging and utility.

- What’s missing from the tools you’ve tried in this category?

Your blueprint for differentiation.

4. Brand Awareness & Positioning Testing

When to use: Before a campaign, rebrand, or launch

Your perception lives in the customer’s mind—these questions show you what’s actually there.

- Have you heard of [brand/product] before? (Yes/No)

Measure awareness baseline before pushing spend.

- Where did you first come across [brand/product]?

Identify the most effective touchpoints or channels.

- What comes to mind when you hear our name?

Unfiltered associations = true brand equity.

- Which of these headlines/messages resonates most with you?

Write copy that lands, not just reads well.

- Which of these benefits matters most to you? (Ranked)

Prioritize what customers actually care about.

- If you had to describe our product in one sentence, what would you say?

This shows you how your market is framing you.

5. Pricing & Willingness to Pay

When to use: Before launching a paid product, feature, or pricing tier

Test how your value translates to dollars—before your revenue does.

- How much would you expect to pay for a tool that does [X]?

Uncover perceived value, not just competitor benchmarks.

- What would make you feel that [price] is too expensive?

Surface the fear factors behind price resistance.

- What price would feel like a great deal for you?

Establish a psychological floor for price anchoring.

- Would you prefer monthly or annual billing?

Optimize cash flow and pricing cadence.

- Which of these pricing models makes the most sense for you? (Tiered, usage-based, flat fee)

Match your model to your buyer’s mental model.

6. Product-Market Fit Signals

When to use: After launch, in the early traction stage

These are the gold-standard indicators of whether you’ve found product-market fit or still need iteration.

- How disappointed would you be if you could no longer use our product? (Very/Somewhat/Not at all)

The definitive PMF signal. Compare the share of “Very” answers against the 40% benchmark.

- What type of person would most benefit from this product?

Refine your ICP straight from users.

- What would you likely use this product for most?

Confirm or redirect your primary use case hypothesis.

- What’s the biggest benefit you’ve experienced so far?

Get clarity on what your product really delivers, not just what it claims to.
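The 40% benchmark on the disappointment question is easy to check once responses are in. This sketch assumes the benchmark means "at least 40% of respondents answer Very," which is how the rule is commonly stated; the function name, the answer labels, and the sample data are illustrative only.

```python
from collections import Counter


def pmf_signal(answers, benchmark=40.0):
    """Return (percent answering "Very", whether that meets the benchmark).

    Assumes answers are the labels "Very", "Somewhat", or "Not at all";
    matching is case-insensitive and ignores surrounding whitespace.
    """
    counts = Counter(a.strip().lower() for a in answers)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one answer")
    very_pct = 100 * counts["very"] / total
    return very_pct, very_pct >= benchmark


# Hypothetical responses to the disappointment question
answers = ["Very", "Somewhat", "Very", "Not at all", "Very",
           "Somewhat", "Very", "Very", "Not at all", "Somewhat"]
pct, meets = pmf_signal(answers)
print(f"{pct:.0f}% very disappointed; meets 40% benchmark: {meets}")
```

With small samples the percentage swings a lot from one response to the next, so treat the result as a direction rather than a verdict until you have a reasonable number of answers.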

7. Ad Concept or Messaging Testing

When to use: Before launching paid ads, landing pages, or social campaigns

Don’t guess what will convert. Let your audience tell you what resonates.

- Which of these taglines would get your attention?

Test hooks before you burn the budget.

- Which visual feels most relevant to your job?

Match design to function, not just aesthetics.

- If you saw this ad, what would you expect the product to do?

Check message clarity: can they tell what you actually offer?

- What would make you click or ignore this message?

Get insight into scroll-stopping psychology.

- Does this message sound trustworthy?

Trust drives conversion, so don’t assume you’ve earned it.

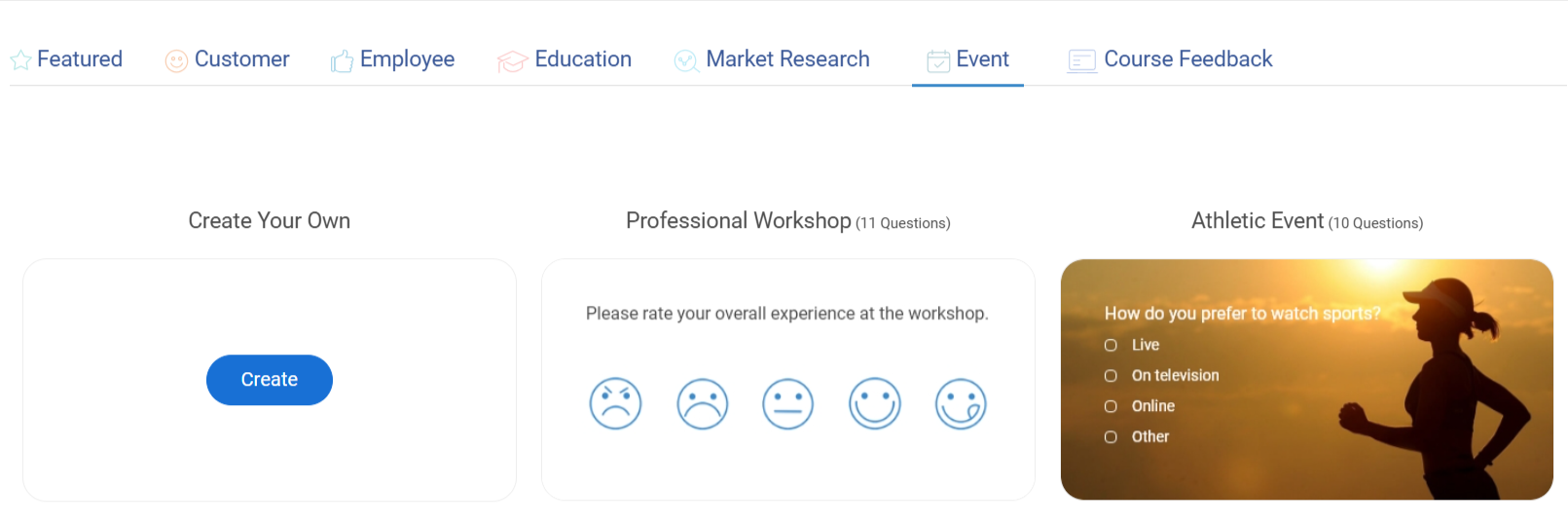

Section 5: Event Survey Question Examples

Goal: Capture feedback before, during, and after events to improve content, logistics, and attendee experience—whether you’re running a webinar, workshop, conference, or internal all-hands.

1. Pre-Event Surveys

When to use: 1–2 weeks before the event

Use this to tailor content, optimize turnout, and align with real attendee expectations.

- What are you hoping to learn or achieve from this event?

Uncover the outcomes your audience actually values.

- How familiar are you with the topic/theme? (Scale: 1–5)

Calibrate content depth to match your audience’s starting point.

- Which sessions, tracks, or speakers are you most excited about?

Prioritize what will drive attendance and energy.

- What would make this event worth your time?

Define your success criteria based on their ROI lens.

- How likely are you to attend live vs. watching the recording?

Forecast engagement and plan your follow-up strategy.

2. Post-Event Experience Feedback

When to use: Immediately after the event

Capture fresh, unfiltered reactions to understand what worked—and what didn’t.

- How would you rate the overall event experience? (1–5)

Establish a high-level satisfaction benchmark.

- What was the most valuable part of the event for you?

Identify what resonated so you can scale it next time.

- What didn’t meet your expectations?

Surface any disconnect between promotion and delivery.

- How would you rate the logistics (timing, platform, communication)? (1–5)

Pinpoint operational friction that detracted from the experience.

- Did you feel engaged during the session(s)? (Yes/No)

Validate whether your content held attention or lost it.

- Would you attend another event like this from us? (Yes/No)

Measure repeat interest and future event potential.

3. Session-Level Feedback

When to use: For multi-track events or conferences, after each session

Zoom in on individual content blocks to assess performance and speaker impact.

- How useful was this session to you? (1–5)

Gauge perceived value at the session level.

- How knowledgeable and engaging was the speaker? (1–5)

Evaluate speaker effectiveness beyond the slides.

- Was the session length appropriate? (Too short / Just right / Too long)

Adjust the pacing for future programming.

- Did this session meet its stated learning objectives? (Yes/No)

Verify whether expectations matched outcomes.

- What would you change or improve about this session?

Gather precise ideas for improving relevance and flow.

4. Networking & Format Feedback

When to use: Post-event, especially for in-person, virtual, or hybrid formats

Assess the experience beyond content—how people felt and connected.

- Did you have enough opportunities to connect with other attendees?

Measure whether the event enabled meaningful connections.

- Was the event format (virtual/in-person/hybrid) effective for you?

Determine if delivery matched audience preferences.

- How would you rate the event platform or venue experience? (1–5)

Spot technical or environmental friction points.

- What could we do to make future events more engaging?

Collect actionable ideas to improve structure, flow, or energy.

5. Post-Event Follow-Up Insights

When to use: 3–7 days after the event

Track impact beyond the event and identify opportunities for continued engagement.

- What’s one thing you’ve done or changed since the event?

Measure applied value, not just perceived value.

- Are you interested in getting more resources on this topic? (Yes/No)

Segment follow-up content or nurture campaigns by intent.

- Would you recommend this event to a peer or colleague?

Assess referral potential and credibility earned.

- How likely are you to attend future events from us? (1–10)

Track loyalty and inform your event programming roadmap.

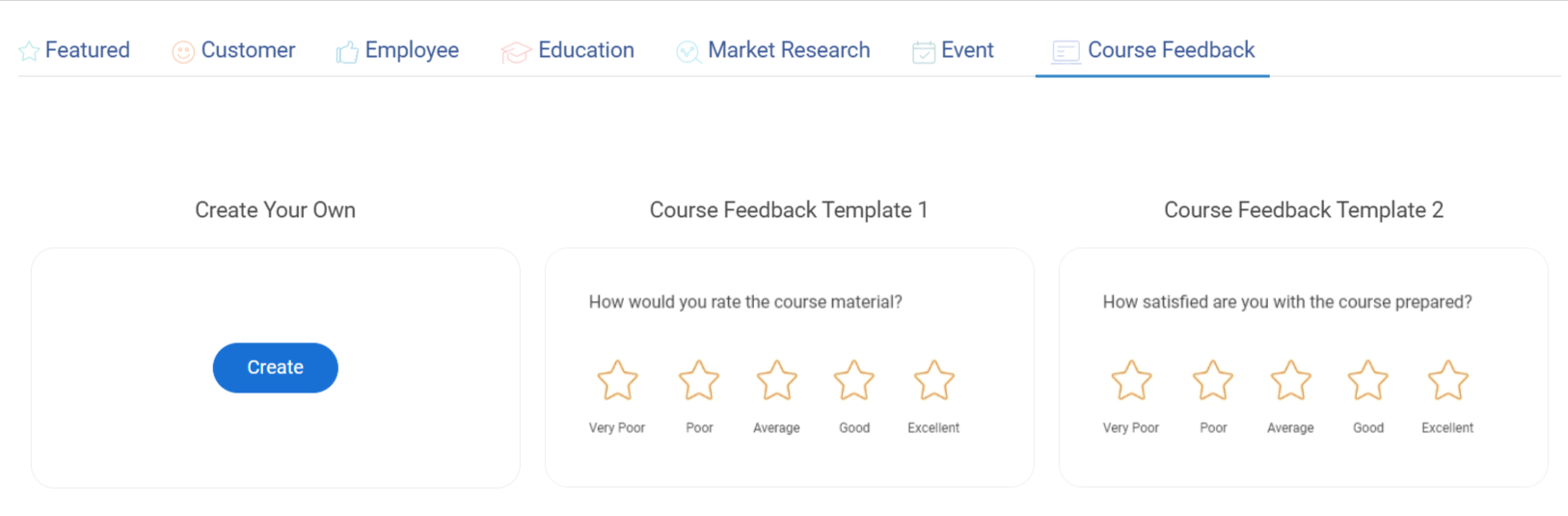

Section 6: Course Feedback Survey Question Examples

Goal: Evaluate course quality, instructional effectiveness, learner satisfaction, and retention to improve future iterations of your program.

1. Overall Course Satisfaction

When to use: At the end of a course, workshop, or program

Get a broad read on value, alignment, and experience quality.

- How satisfied are you with the course overall? (1–5)

Start with sentiment before digging deeper.

- Did the course meet your expectations?

Check whether the promise aligned with the outcome.

- Would you recommend this course to a colleague or peer?

Your internal NPS: no spin, just signal.

- What was the most valuable part of the course?

Learn about the moments that landed well.

- What was the least valuable or most frustrating part?

Find and fix friction that’s dragging value down.

- How would you rate the pace of the course?

Too fast or too slow, pacing shapes retention and focus.

2. Learning Outcomes & Understanding

When to use: Post-course or post-module to evaluate knowledge gained

Measure real-world confidence, not just content consumption.

- How confident are you in applying what you learned?

Confidence drives usage, so track it.

- Did the course help you achieve your learning goals?

Success is subjective, and this makes it visible.

- Which topics felt too advanced or too basic?

Learn where to dial in your difficulty curve.

- What’s one thing you learned that you’re already using?

Confirm practical value, not just passive learning.

- Are there any key concepts you’re still unclear about?

Use this to clean up instructional debt before scaling.

3. Course Content & Format

When to use: For both live and self-paced content

This feedback helps shape not just what is taught, but how it’s delivered.

- How would you rate the clarity of the course materials?

Clarity = confidence. Don’t leave it to chance.

- Were the examples and case studies helpful?

Contextual learning sticks better, so test whether it landed.

- Was the course too long, too short, or just right?

Goldilocks feedback helps tune length to learning goals.

- Were interactive elements (quizzes, polls, assignments) effective?

See if active learning elements are doing their job.

- Did the content align with what was advertised?

Expectation gaps kill trust. Check for them.

4. Instructor or Facilitator Evaluation

When to use: After instructor-led sessions or video modules

Strong instruction makes or breaks experience, even with great content.

- How clearly did the instructor explain the material?

Clear teaching = smooth comprehension.

- Was the instructor engaging and easy to follow?

Engagement drives energy and focus.

- Did the instructor encourage participation and questions?

Psychological safety improves learning outcomes.

- How knowledgeable did the instructor seem?

Credibility influences trust and retention.

- What would improve the instructor’s delivery?

Give them a way to level up, not just be rated.

5. Accessibility & Usability

When to use: To evaluate online course platforms or tools

If the platform experience is broken, learning breaks down too.

- Was the platform easy to use and navigate?

Friction here directly impacts completion rates.

- Did you face any technical issues while accessing the content?

Hidden issues often go unreported; ask directly.

- Was the course accessible on your preferred device?

Learners expect flexibility; this confirms it.

- Were audio, video, and text materials clear and of high quality?

Low fidelity means lower engagement.

- Did you receive adequate support if something went wrong?

Support is part of the experience, not a side note.

6. Mid-Course Check-Ins

When to use: In longer programs or multi-module tracks

Catch disengagement early, before it turns into drop-off.

- How is the course going for you so far?

Invite honest, low-pressure feedback midstream.

- Are you keeping up with the pace and workload?

This helps spot potential bottlenecks or overloads.

- What would help you get more out of the course?

Get ideas on how to improve the experience in real time.

- Have you applied any course learnings yet?

Application is the goal; this checks for it early.

- What would you like to see more or less of in the remaining modules?

Let learners shape the rest of the journey.

7. Certification & Assessment Feedback

When to use: For skill-based or credentialed training

Ensure your certification adds value, not just a badge.

- Were the assessments aligned with the course content?

Avoid testing for the sake of it; make sure it fits.

- Were the passing criteria clearly explained?

Clarity here avoids confusion and distrust.

- Did the certification feel valuable and relevant?

Perceived value matters, especially for professional growth.

- How challenging was the final assessment?

Gauge whether it tested mastery or just recall.

- Would you pursue additional certifications from us?

This is your trust check and your upsell signal.

Types of Survey Questions (& When to Use Them)

Not all questions pull the same weight. The format you choose shapes the kind of answers you get—and whether you can actually do something with them.

Here’s what to use, when, and why it matters:

- Multiple Choice

Use when you need structured, fast-to-analyze answers.

Ideal for workflows, tool usage, and segmentation. Keeps the data clean and the decision tree clear.

- Likert Scale (1–5 or 1–10)

Use when you’re measuring sentiment, satisfaction, or confidence.

Think CSAT, NPS, and feature value. Label every point. Don’t guess at what a “3” means.

- Open-Ended

Use when you want the story behind the score.

One well-placed text box can give you roadmap gold, but only if you plan to read it.

- Ranking

Use when you need to force tradeoffs.

Great for prioritizing benefits, features, or messaging. Reveals what really matters when everything can’t be #1.

- Matrix/Grid

Use when you’re evaluating multiple items on the same axis.

Efficient for session feedback or feature comparisons, but keep it simple. Grids get overwhelming fast.

Use fewer formats, not more. Start with closed questions for clarity. Layer in open text for insight. And always ask: Will I actually use this answer to make a decision?

Mapping Survey Questions to Business Goals

A survey isn’t useful unless it leads to action.

- Each question is a lever.

- Each response is a roadmap.

- Each follow-up is where the real work begins.

This table shows you exactly how to turn responses into roadmaps, manager coaching, retention plays, and messaging updates. These are not insights for later—they’re instructions for what to do now.

| If your goal is… | Ask this question… | Do this next |
|---|---|---|
| Stop churn before it compounds | What’s the primary reason you decided to cancel? | Tag responses by theme (price, onboarding, missing features). Build a "Churn Playbook" and fix the top 2 drivers in the next 30 days. |
| Fix what’s broken in onboarding | Did you feel confident using the product after your first week? | Any "No" = follow-up via CS within 24h. Rewrite docs or screens flagged 3+ times. |
| Make support a retention driver, not a liability | Was your issue resolved to your satisfaction? | If “No,” trigger a manual follow-up. Track rep performance and retrain based on repeat patterns. |
| Refocus your product roadmap | What feature do you wish we had? | Group responses by use case. Ship a roadmap update that hits 1–2 high-frequency themes. |
| Validate real product-market fit | How disappointed would you be if you could no longer use our product? | If fewer than 40% say “very disappointed,” pause marketing. Interview power users. Reposition around what they value. |
| Make every training session worth the time | Did the course help you perform your job better? | Low scores? Archive or redesign the module. Survey again 30 days post-refresh. |
| Level up team leads | Do you receive regular and helpful feedback from your manager? | Score |
| Catch quiet disengagement | How likely are you to stay at the company over the next year? | Flag responses under 7. Do 1:1s within the week. Look for patterns in team or tenure. |
| Drive post-event conversion | Would you like to be contacted with related resources? | Tag "Yes" as warm leads. Trigger demo invites within 48 hours. Send tailored content based on the session attended. |
| Tighten your GTM messaging | What motivated you to take this course? | Use top answers as email subject lines and ad copy. Rewrite your value prop to reflect real pull. |
| Clean up confusing UX | What part of the product was most confusing? | If mentioned by 3+ people, record a Loom walkthrough or build a tooltip. Ship by the end of the sprint. |
| Double down on what worked in your event | What was the most valuable part of the event? | Feature top-voted sessions as on-demand content. Use insights to pitch speakers for next time. |
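The product-market fit row in the table comes down to one calculation: the share of respondents who answer "very disappointed," compared against the 40% bar. Here is a minimal Python sketch of that check, assuming the answers are exported as plain strings. The function name `pmf_share` and the sample answers are hypothetical and only there for illustration.

```python
from collections import Counter

PMF_THRESHOLD = 0.40  # the 40% bar quoted in the table


def pmf_share(answers):
    """Return the fraction of answers equal to 'very disappointed'."""
    if not answers:
        raise ValueError("no answers to score")
    # Normalize case and whitespace so export quirks do not split the count.
    counts = Counter(a.strip().lower() for a in answers)
    return counts["very disappointed"] / len(answers)


# Hypothetical export: 10 responses to the "how disappointed" question.
answers = (
    ["Very disappointed"] * 3
    + ["Somewhat disappointed"] * 5
    + ["Not disappointed"] * 2
)

share = pmf_share(answers)
below_bar = share < PMF_THRESHOLD
print(f"{share:.0%} very disappointed; below 40% bar: {below_bar}")
```

With this sample, 3 of 10 respondents pick "very disappointed," so the check lands below the bar and the "pause marketing, interview power users" step applies.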

FREE. All Features. FOREVER!

Try our Forever FREE account with all premium features!

Best Practices for Writing Survey Questions

You don’t need a PhD to write a good survey, but you do need to avoid the mistakes that make 80% of them useless. These best practices come from product teams, HR leads, CX pros, and researchers who’ve run hundreds of surveys—and fixed just as many broken ones.

If you follow just these 7 rules, you’ll be in the top 10% of survey designers.

1. Write Like a Human, Not a Research Robot

- Say: “What frustrated you the most?”

- Don’t say: “Please indicate the level of dissatisfaction experienced relative to service delivery.”

Pro Tip: Use plain English. Grade 6 reading level. No jargon.

2. Don’t Lead the Witness

- Say: “How would you rate the feature?”

- Don’t say: “How great did you find the feature?”

Pro Tip: Keep phrasing neutral. Avoid assuming positive sentiment.

3. One Question = One Idea

- Say: “Was the content clear?”

- Don’t say: “Was the content clear and engaging?”

Pro Tip: Double-barreled questions confuse people and produce junk data.

4. Use Balanced, Labeled Scales

- Use: 1 (Very Dissatisfied) to 5 (Very Satisfied)

- Don’t use: 1, 2, 3, 4, 5 with no labels—or a skewed scale like “Good to Excellent”

Pro Tip: Every point on your scale should mean something. Keep it symmetric.

5. Keep Surveys Short—But Not Stupid

- Under 10 questions = more completions

- Mix closed + 1–2 optional open-ended questions.

- Use logic to skip irrelevant questions.

Pro Tip: Respect their time. Only ask for what you’ll use.
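Skip logic is easier to reason about when you see it as a rule attached to a question. The sketch below is one hedged way to model it in Python. The question schema, the `show_if` field, and the function `visible_questions` are all hypothetical and not tied to any survey tool.

```python
# Hypothetical question schema: a question may carry a `show_if` rule
# that inspects earlier answers. Questions without a rule always show.
QUESTIONS = [
    {"id": "used_support", "text": "Did you contact support this month?"},
    {
        "id": "support_resolved",
        "text": "Was your issue resolved to your satisfaction?",
        "show_if": lambda answers: answers.get("used_support") == "Yes",
    },
    {"id": "overall", "text": "How would you rate your experience?"},
]


def visible_questions(questions, answers):
    """Return ids of questions whose show_if rule passes (or is absent)."""
    return [
        q["id"]
        for q in questions
        if q.get("show_if", lambda _: True)(answers)
    ]


print(visible_questions(QUESTIONS, {"used_support": "No"}))
print(visible_questions(QUESTIONS, {"used_support": "Yes"}))
```

Someone who never contacted support sees two questions instead of three, which is the point: shorter surveys without losing the follow-up for people it applies to.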

6. Place Open-Ended Questions Strategically

- End of section or survey

- Never lead with them

- Only include if you plan to read them

Pro Tip: One good open-text question is worth more than five bad ones.

7. Test Your Survey Like You Would Test a Product

- Preview every logic path.

- Do a dry run with 5 people.

- Ask, “Can someone answer this clearly in under 10 seconds?”

Pro Tip: If your own team gets confused, your audience will bounce.

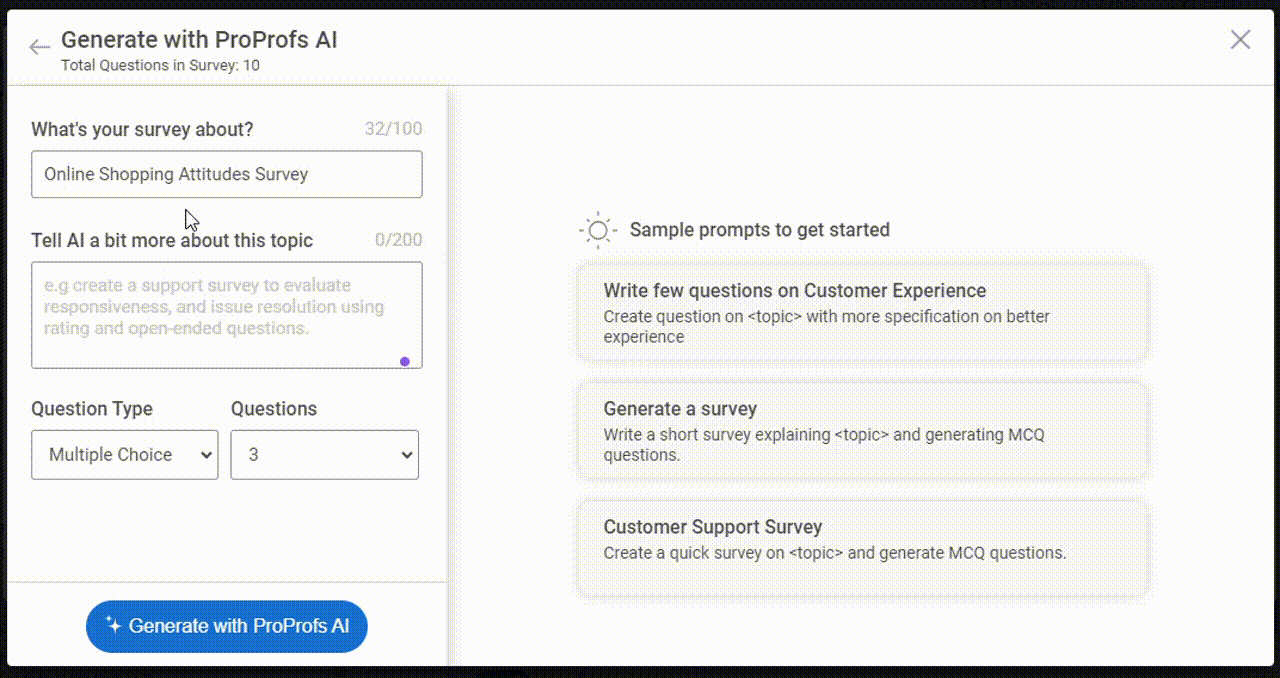

Feeling like all of this is too much to manage? Tools like ProProfs Survey Maker can help. The AI feature can quickly generate survey questions that are relevant to your research goal.

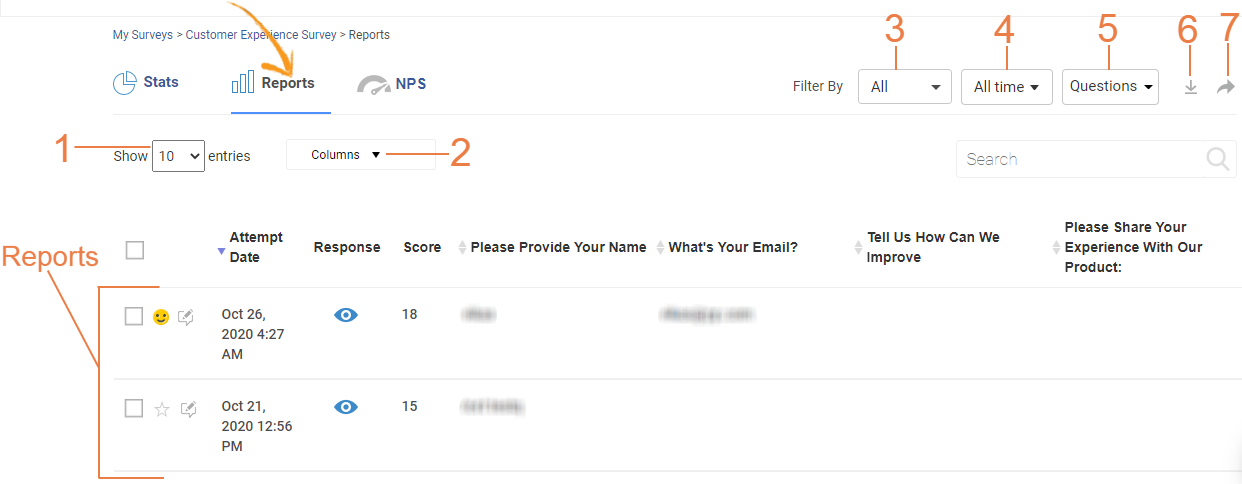

What to Do With Survey Results

Collecting responses isn’t the win. Doing something with them is. Let me show you how to turn raw survey data into action—fast, whether you’re in HR, product, CX, or marketing.

1. Start by Filtering Responses by Segment

Don’t look at averages—look at patterns by:

- Department (for HR survey questions)

- Role or seniority

- Plan type or lifecycle stage (for customers)

- Geography, channel, attendance (for events)

Use tagging, filters, or spreadsheet pivot tables. Tools like ProProfs Survey Maker let you do this in-platform.
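If your responses live in a spreadsheet export, the same segment view can be sketched in a few lines of Python. This is a minimal example using only the standard library. The sample data, the plan names, and the function `mean_by_segment` are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (plan type, satisfaction score on a 1-5 scale).
responses = [
    ("free", 2), ("free", 3), ("free", 2),
    ("pro", 5), ("pro", 4), ("pro", 5),
]


def mean_by_segment(rows):
    """Group scores by segment and return the mean score for each."""
    grouped = defaultdict(list)
    for segment, score in rows:
        grouped[segment].append(score)
    return {segment: mean(scores) for segment, scores in grouped.items()}


overall = mean(score for _, score in responses)
by_segment = mean_by_segment(responses)

print(f"overall: {overall:.2f}")
for segment, avg in sorted(by_segment.items()):
    print(f"{segment}: {avg:.2f}")
```

The overall average here is 3.50, which looks unremarkable. Split by plan, it hides a free tier at roughly 2.3 and a pro tier at roughly 4.7, which is exactly the pattern an average would bury.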

2. Identify the Patterns, Not the Outliers

Don’t chase every comment. Look for:

- Repeated phrases in open-text fields

- Low scores clustered around a feature, team, or topic

- Drop-off points across lifecycle moments (e.g., onboarding, support)

Create a quick “response clustering” doc. If 4+ people say the same thing—act on it.
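A "response clustering" doc can start as a simple keyword count. The sketch below is a rough Python version of the "4+ people" rule of thumb. The theme names, keyword lists, and the function `themes_to_act_on` are hypothetical, and plain substring matching is a deliberate simplification.

```python
from collections import Counter

# Hypothetical theme keywords; a real list would come from reading responses.
THEMES = {
    "pricing": ("price", "expensive", "cost"),
    "onboarding": ("setup", "onboarding", "getting started"),
}
MIN_MENTIONS = 4  # the "4+ people" rule of thumb from the text


def themes_to_act_on(comments, themes=THEMES, min_mentions=MIN_MENTIONS):
    """Count comments per theme (once per comment) and keep frequent ones."""
    counts = Counter()
    for comment in comments:
        text = comment.lower()
        for theme, keywords in themes.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return {t: n for t, n in counts.items() if n >= min_mentions}


comments = [
    "Too expensive for a small team",
    "The price jumped at renewal",
    "Cost is hard to justify",
    "Pricey, but setup was smooth",
    "Price per seat is high",
    "Onboarding took too long",
]

print(themes_to_act_on(comments))
```

In this sample, pricing shows up in five comments and clears the threshold, while onboarding appears in only two and stays off the action list. Substring matching will produce false hits on real data, so treat the output as a shortlist to read through, not a verdict.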

3. Share the Results Internally

Transparency builds trust. Even if the feedback is tough:

- For employee surveys: summarize themes and publish next steps in your all-hands

- For software survey questions: brief the product, support, and marketing teams

Turn feedback into a “What We Heard → What We’re Doing” format.

4. Close the Loop with Respondents

Especially for employees and power users:

- Send a “Thank you—here’s what we’re doing about it” email

- Highlight changes that came directly from feedback

- Offer follow-up options (e.g., “Want to dig deeper? Join our research group”)

Closing the loop = higher trust, better response rates next time.

5. Build a Feedback → Action → Learn Loop

The goal isn’t just to listen once. It’s to learn faster than your competitors do, and to spot bottlenecks before they slow you down.

Set a recurring system:

- Quarterly feedback themes

- Monthly action reviews

- Continuous testing of solutions

Make surveys part of your product, culture, or training loop—not an isolated project.

You Don’t Need More Feedback—You Need Better Questions

Surveys aren’t admin tasks. They are your decision infrastructure.

This guide gave you 250+ proven questions across CX, employee engagement, training, events, and more—plus the strategy behind when, why, and how to use them.

If you’re done operating on gut feel, start getting real signal. With ProProfs Survey Maker, you can launch any of these surveys in minutes—no setup lag, no friction. Just clarity.

Better questions create better companies. Start asking!

Frequently Asked Questions

2. What are the best survey questions to get honest employee feedback?

Ask about safety, communication, and purpose, not just surface-level satisfaction. Examples:

- “Do you feel comfortable speaking up at work?”

- “Do you understand how your work contributes to the company’s goals?”

Anonymous surveys and neutral phrasing increase honesty.

3. How do I make sure my survey questions aren’t biased or leading?

- Avoid assumptions (“How great was your experience?”)

- Use a neutral tone (“How would you rate your experience?”)

- Balance scale options (equal positives and negatives)

- Don’t double up questions (“Was it clear and engaging?” → Split it.)

4. What’s the ideal number of questions for a survey?

Aim for:

- 5–7 questions for quick pulses

- 10–15 for employee or product feedback

- Under 5 minutes total

Always show a progress bar and use skip logic where possible.

5. How do I write questions that align with my business goals?

Start with the decision, then work backward:

- Ask: what decision will this answer inform?

- Then write the question backward from that decision.

Example: Want to reduce churn? Ask: “What made you consider canceling?”

6. How do I get more responses to my survey?

- Keep it short

- Be clear about the time commitment

- Explain how results will be used

- Offer small incentives (vouchers can increase response rate by 7%)

- Use reminders and optimized timing
