Unsure of your target audience’s preferences and how to convert your company’s leads into sales? A/B testing can reveal what copy and messaging your audience responds best to. But besides copy, there are plenty of other trigger points A/B testing can uncover.

Landing pages, websites, and emails are popular content assets companies run A/B tests on. Approximately 77% of companies run regular tests on websites, 60% on landing pages, and 59% on email blasts.

If you’re thinking of jumping on the bandwagon and want to know what A/B testing is and how to pull it off without a hitch, keep reading to discover all the ins and outs of this marketing research tool.

What is A/B Testing?

A/B testing compares two versions of a piece of content, such as a web page, an email, or a screen in a web application, to see which version performs better against a chosen metric.

Each version is sent or shown to separate segments of a target audience, with the intent of collecting feedback and measuring predefined metrics like email open or click-through rates.

The two variants are usually labeled A and B. Depending on the content, they reach users through different channels, such as email, landing pages, or ads, as covered below.

How Does A/B Testing Work?

A/B testing works by setting up parameters and variables within a content management system.

Typically, one variable between the two versions of content differs, and companies test which version gets more engagement or responses.

About half the target audience receives one version of the content, and the other half gets the second version.
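One common way to get a roughly even split is to assign people to a version at random. The sketch below is only an illustration of that idea, not the method any particular content management system uses; the function name `assign_variants` and the sample addresses are hypothetical.

```python
import random


def assign_variants(audience, seed=None):
    """Randomly split an audience into two groups of near-equal size."""
    rng = random.Random(seed)      # a fixed seed makes the split reproducible
    shuffled = list(audience)      # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {"A": shuffled[:midpoint], "B": shuffled[midpoint:]}


# Hypothetical audience of six email addresses
audience = [f"user{i}@example.com" for i in range(6)]
groups = assign_variants(audience, seed=42)
print(len(groups["A"]), len(groups["B"]))  # 3 3
```

Random assignment matters because it keeps the two groups comparable. If you split by sign-up date or region instead, a difference in results could come from the groups rather than from the content.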

An A/B test usually runs for a predefined amount of time, such as 48 hours. Marketers can choose how long they want an A/B test to run within a content management system’s available windows. After the period is over, the test results reveal whether it was inconclusive or if there was a winning version.
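How a tool decides between a winner and an inconclusive result varies by platform. One standard statistical approach, shown here as an assumption rather than as any specific tool's method, is a two-proportion z-test on the conversion counts. The function name `compare_variants`, the 0.05 threshold, and the sample numbers are all illustrative.

```python
from math import sqrt
from statistics import NormalDist


def compare_variants(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided two-proportion z-test on conversion counts.

    Returns "A", "B", or "inconclusive".
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:               # no variation at all, nothing to compare
        return "inconclusive"
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value >= alpha:           # difference could plausibly be chance
        return "inconclusive"
    return "B" if rate_b > rate_a else "A"


# Hypothetical results: conversions and audience size per version
print(compare_variants(200, 5000, 260, 5000))  # B
print(compare_variants(50, 1000, 55, 1000))    # inconclusive
```

The second call shows why small differences on small audiences often come back inconclusive: version B converted slightly more people, but not by enough to rule out chance.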

Benefits of A/B Testing

There are significant benefits to A/B testing such as:

Increase Profits

A/B testing does not enlarge your audience. It splits the audience you already have between two versions, so each segment sees one of them. The gain comes from learning which version converts better and then showing that version to everyone.

Over time, sending more of your traffic to the better-performing version can improve conversion rates without any increase in reach.

Furthermore, the results of A/B tests can help lower bounce rates and increase customer engagement, because you are giving customers the kind of content they want to see: the kind that converts more users into buyers.

The more the sales, the higher the turnover.

Improves Content Engagement

As mentioned above, through testing, you can gain valuable insights into what may or may not work in a given marketing strategy.

This gives you the opportunity to create content that is backed by data and more likely to succeed. You can even go back to the content you have already created and make changes to foster more customer engagement.

Improves Customer Experience

With A/B tests, you can learn what your audience wants to see on your website, and you can make changes accordingly.

Customers are more likely to sign up or subscribe to services your site has to offer if their user experience is good. Testing lets you monitor engagement on your site and helps you make small changes every now and then to improve the overall customer experience.

Drawbacks of A/B Testing

However, A/B testing isn’t perfect and has its limitations. The person or company designing the test and the content must understand how the process works. Sometimes A/B test results come back with confusing or inconclusive data, meaning there isn’t anything insightful to take action on.

For example, inconclusive data from an A/B test of email subject lines leaves you where you started.

You still don’t know what messaging in your subject lines will make more people open your emails. It also takes additional effort to collect and analyze the data that you get from A/B testing.

One test result isn’t going to give you enough generalized actionable data, and the process relies on a lot of experimentation. Just as you think you’ve proven a hypothesis, it may become disproven by the next test.

You Need A/B Testing For These Scenarios

If you’re unsure what type of messaging and content will appeal to your target market, you need A/B testing. You also need to conduct several tests if your conversion rates are below industry average or your company’s internal targets.

The same applies if you decide to go after a different audience or focus more on subsegments/niches within your general target market.

Let’s look at the different types of content you can run A/B tests on.

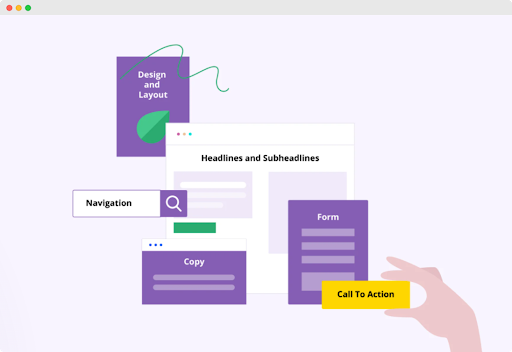

Types of Content for A/B Testing

Source: VWO

You can run A/B tests on a variety of content, such as:

- Landing pages

- Emails

- Design elements, including the call to action buttons

- Digital or PPC ads

- Social media posts

- Rich media, such as videos

(a) Landing Pages

Say a car dealership is launching a new promo and wants to test what messaging will get website visitors to schedule a test drive.

The dealership plans to measure, for each of two promotional landing page versions, the percentage of visitors who schedule a test drive. That scheduling rate is the KPI (Key Performance Indicator).

The dealership launches versions of the landing page with different layouts, design elements, and words. One emphasizes the features and benefits of the vehicles, while the other concentrates on the savings buyers can gain.

The page that generates the highest percentage of test drive requests will indicate which message resonated more with the target audience.

(b) Emails

Organizations can conduct A/B tests on newsletters and emails using open rates, click rates, and click-through rates as KPIs.

Open rates measure the percentage of recipients who opened the email, while click rates represent the number of people who clicked on a link as a percentage of the number who received the email.

The click-through rate, as used here, is the percentage of people who opened the email and then clicked on a link; some email tools call this the click-to-open rate. Subject lines usually determine open rates, while click and click-through rates are tied to the overall content of the email.
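To make the three email metrics concrete, here is a small sketch that follows the definitions given in this article. Email tools do not all define these rates the same way, so treat the formulas as assumptions to check against your own platform. The function name `email_metrics` and the sample counts are hypothetical.

```python
def email_metrics(delivered, opened, clicked):
    """Return open, click, and click-through rates as percentages.

    `clicked` counts recipients who opened the email and clicked a link.
    """
    if delivered <= 0:
        raise ValueError("delivered must be greater than zero")
    open_rate = 100 * opened / delivered
    click_rate = 100 * clicked / delivered
    # Clicks measured against opens only, as this article describes it
    click_through_rate = 100 * clicked / opened if opened else 0.0
    return {
        "open_rate": open_rate,
        "click_rate": click_rate,
        "click_through_rate": click_through_rate,
    }


# Hypothetical send: 2,000 delivered, 500 opened, 100 clicked
print(email_metrics(delivered=2000, opened=500, clicked=100))
# {'open_rate': 25.0, 'click_rate': 5.0, 'click_through_rate': 20.0}
```

Comparing these numbers between Version A and Version B shows where the difference lies: a gap in open rate points to the subject line, while a gap in the click metrics points to the body of the email.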

As with the car dealership’s landing page, a company might want to test different images in an email, a different sign-off, or different copy on a CTA button to see if it results in a higher click or click-through rate.

(c) Design Elements

Design elements include everything from layout and images to color schemes and templates. Provided all other factors stay the same, a car dealership might try blue versus red color schemes to see if one results in more engagement.

Engagement may refer to the average time visitors stay on the page and interact with various components. It can also refer to whether visitors take desired actions, such as filling out an online poll or responding to a survey. You should strive to learn from the best digital experts if you want to have a more interactive UI and user engagement that reflects on your website’s performance.

(d) Digital or PPC Ads

Click rates are the typical KPI for digital or PPC (Pay Per Click) ads. However, you can also measure these ads by the calls they drive to a company’s online sales number or by store visits. These ads show up on other web pages, including news articles and web-based email programs.

The car dealership can A/B test digital or PPC ads with various messages, colors, and images.

(e) Social Media Posts

Social media posts can inform customers, but they are also meant to increase engagement and sales. Companies might use sales conversions from a post to measure effectiveness in an A/B test.

For example, two different posts announcing a new car model could have images of different trim levels. Or one post could feature a client testimonial video versus a static image.

(f) Rich Media

You can also perform A/B testing on rich media content and YouTube videos.

There are a variety of different aspects you can alter between each version, such as the length, thumbnails, color, location, the call to action, music, and even the tagline.

KPIs for rich media content could be how long a customer watches the video, how many times the call to action was used, and how many inquiries the company received from the video.

The most common A/B test for video compares short-form and long-form versions of the same video. This can test both engagement and how much length viewers will tolerate.

A/B Testing vs. Split Testing

Many marketers use the two terms interchangeably. Where a distinction is drawn, as it is here, A/B testing is done with two variations, while split testing incorporates three or more variations.

For example, an A/B test would compare two versions of the same page that differ in a single variable, such as the color of a button, and measure which one performs better.

Split testing allows you to make smaller changes to a control version and measure the different responses in different variations over time, like changing headings and colors of text and so on. It involves changing more variables.

Why Should You Use A/B Testing In Your Marketing Strategy?

Source: Freepik

Using A/B testing as a part of your marketing research can make your methods more effective. You can learn how to optimize your marketing communications and outreach efforts. A/B tests will help reach goals and determine the best way to accomplish them.

You’ll also learn what works with your target audience during different stages of the buyer’s journey, from initial interest to post-sale follow-up.

The length of your A/B tests will depend on your goals and the results that come in.

For instance, a good rule of thumb is to run an A/B test for 48 hours on email subject lines. But for measuring conversions from digital ads or landing pages, you may want to extend that length to 30 days or as long as an associated promotion runs.

User Testing vs. A/B Testing

Unlike A/B testing, user testing asks specific individuals to go through predefined steps. These steps can include purchasing from your online store or filling out a contact request form on your webpage. User testing aims to provide instantaneous feedback about the customer experience.

Companies employ user testing to discover pain points – or areas that frustrate customers. These areas have the potential to prevent conversions or reveal unmet needs the organization can capitalize on.

User testing is also ideal for helping better understand consumers’ behaviors and how members of the target audience feel about and perceive products and services. The data from user testing might reinforce the company’s decisions or justify changes and improvements.

Examples of User Testing

A Net Promoter Score survey is one example of user testing. If you’ve ever gotten a survey asking whether you’d recommend a company to those you know on a scale of 0 to 10, you’ve seen a version of this user test.

A Net Promoter Score is the percentage of customers who actively promote the organization’s brand minus the percentage who are detractors.

Larger scores show higher levels of positive consumer sentiment and customer experiences by association. Customers who rate companies as a 9 or 10 are more likely to advocate for the company through word-of-mouth advertising and have had exceptional experiences.

On the other hand, lower scores reveal issues with the company’s ability to provide a good customer experience and areas for improvement. Low scores also usually indicate an organization has to spend more to attract leads.
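The Net Promoter Score calculation can be sketched in a few lines. This follows the standard convention, which the text above only partly spells out: ratings of 9 or 10 count as promoters, ratings of 0 to 6 count as detractors, and ratings of 7 or 8 are passives that only affect the total. The function name and the sample ratings are hypothetical.

```python
def net_promoter_score(ratings):
    """Percentage of promoters minus percentage of detractors."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 to 6
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses from ten customers
ratings = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(ratings))  # 20.0
```

The score ranges from -100, where every respondent is a detractor, to 100, where every respondent is a promoter.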

Resulting Feedback from A/B Testing

User testing usually results in collecting feedback directly from consumers. However, A/B testing might include either direct or indirect feedback.

That’s because an A/B test may not capture commentary from individual consumers.

Instead, A/B tests can record only aggregate and mostly anonymous data like the number of clicks on an ad.

Because user testing involves direct and individual feedback, marketers often scrutinize the information and each participant’s reactions.

Consumers may submit videos of their responses and suggestions during some forms of user testing, such as virtual focus groups. In these cases, marketers may replay and dissect body language in addition to verbal expressions.

How This Feedback Can Be Used

Because A/B test data is aggregate, you can use it to check whether feedback from individual users in user testing holds across your wider audience, or to guide larger-scale experiments with digital ads, website content, or user experience changes.

For instance, a few users submit comments about poor online store experiences during a focus group. They mention it’s confusing and doesn’t outline all the costs they can expect to incur.

A/B tests also reveal that a large percentage of visitors to the online store are abandoning their carts before entering their payment information.

Data from the A/B tests are matched to the information from the focus group to come up with a possible explanation and solution.

A/B Testing Steps

Setting up an A/B test within a content management system is relatively intuitive. Many have video-based tutorials to help guide you through the steps. The first thing you’ll need to do is determine an objective and KPI.

Then you’ll need to create separate versions of your content and label them. For simplicity, most people label them as “Version A” and “Version B” in the titles.

After creating your versions, designate a time frame for gathering and measuring results.

Finally, either publish, schedule, or distribute the content. For items like emails, you’ll also have to designate who receives them or the target audience’s characteristics.

Once you get the hang of it, setting up a simple A/B test only takes a few minutes. You will spend most of your time on content creation, which may take a few hours or a few weeks. Again, the time you spend gathering and analyzing data will vary according to content type and goals.

A/B Testing KPIs and Metrics

The KPIs you decide to use will align with the types of A/B tests you run. Some generic examples include:

- Test speed, velocity, or the number of tests per day

- Test win rate, which is the share of tests in which the new variant outperformed the control

- Conversion or revenue in comparison to an established baseline or the results of other similar tests

- Budget metrics, such as whether tests came in over or under budget, or how accurate your predictions were
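Two of the program-level KPIs in the list above lend themselves to a quick calculation. The sketch below reads win rate as the share of tests in which the new variant won, and measures conversion against a baseline as a relative lift. Both readings are assumptions about how you might define these KPIs, and the function names and sample values are hypothetical.

```python
def win_rate(test_outcomes):
    """Share of tests, as a percentage, in which the new variant won."""
    wins = sum(1 for outcome in test_outcomes if outcome == "win")
    return 100 * wins / len(test_outcomes)


def relative_lift(variant_rate, baseline_rate):
    """Percentage change of a variant's conversion rate over the baseline."""
    return 100 * (variant_rate - baseline_rate) / baseline_rate


# Hypothetical outcomes of four finished tests
outcomes = ["win", "loss", "inconclusive", "win"]
print(win_rate(outcomes))  # 50.0

# Hypothetical conversion rates: 5.2% for the variant, 4.0% baseline
print(round(relative_lift(0.052, 0.04), 1))  # 30.0
```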

You can also use KPIs for specific scenarios. Let’s consider the landing pages on your website. Some KPIs include bounce rate, engagement time, click and micro-conversion rates on specific CTA (Call To Action) buttons, and aggregate click rates on all links.

Interpreting KPIs

The bounce rate, for example, can measure whether your landing page’s content is relevant and engaging. High bounce rates, meaning a higher percentage of people leaving the website without taking action, could reveal content that doesn’t speak to their pain points.

Engagement time, or the time people spend on the page, might reveal messaging and design elements that are working. If average engagement times are high or on target but conversions are lower than you expect, this could point to deficiencies elsewhere on the page.
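As a rough illustration, both metrics can be computed from per-visit records. The record layout below, with `seconds_on_page` and `actions` fields, is a hypothetical stand-in for whatever your analytics tool exports, and a bounce is taken to mean a visit with no actions, in line with the description above.

```python
from statistics import mean

# Hypothetical visit records for one landing page version
sessions = [
    {"seconds_on_page": 12, "actions": 0},
    {"seconds_on_page": 95, "actions": 2},
    {"seconds_on_page": 40, "actions": 1},
    {"seconds_on_page": 8, "actions": 0},
]


def bounce_rate(sessions):
    """Percentage of visits that ended without any action."""
    bounces = sum(1 for s in sessions if s["actions"] == 0)
    return 100 * bounces / len(sessions)


def average_engagement_seconds(sessions):
    """Mean time on page across all visits, in seconds."""
    return mean([s["seconds_on_page"] for s in sessions])


print(bounce_rate(sessions))                 # 50.0
print(average_engagement_seconds(sessions))  # 38.75
```

Running the same calculation on the records for each version gives you a like-for-like comparison between Version A and Version B.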

Click and micro-conversion rates on specific CTA buttons can point to issues with colors or copy.

The words “shop now” might resonate more with your audience than “learn more”. Likewise, a CTA button with a navy blue color may produce more conversions than a gray one.

Aggregate clicks on links typically indicate overall interest or willingness to take action. If people aren’t clicking, the content may not be inspiring enough to take action, or they may not trust the website or what the content is saying.

Tools to Accelerate Your Next A/B Testing Program

Are you wondering how to get started?

Several tools, like Google Analytics, Hotjar, and Outfunnel, can connect your analytics data with software such as Salesforce, Mailchimp, and web analytics platforms.

Google Analytics is one of the most widely used free tools. You can also opt for one of the tiered premium accounts.

Depending on the size of your business and your analytical needs, you may need a paid premium plan. Keep in mind that there is a learning curve, and access to advanced features is not free.

You’ll see things like how many visitors your site gets, bounce rate, and session lengths.

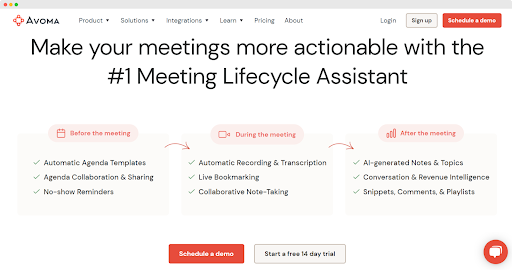

Source: Avoma

Avoma is a tool for recording and analyzing meetings using AI. It’s a valuable way to monitor over-the-shoulder style tests and user interviews, but not necessarily practical for other types of A/B tests.

If you choose to invest in Avoma, you’ll access meeting management, a meeting assistant, and collaboration. While they do not offer a free plan, the lowest option starts at only $15 a month.

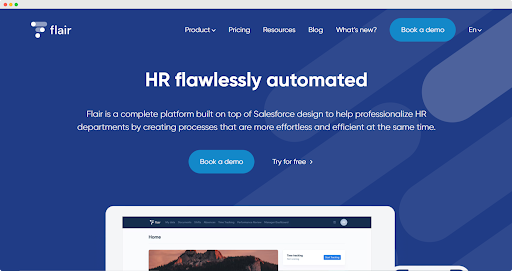

flair.hr is a tool you can use to build on Salesforce’s capabilities and for HR management. Budgeting and resource management KPIs can be just as vital, and Flair makes it easy to manage custom HR requirements.

Image Source: Flair

Although free plans are available, they are limited to recruitment and onboarding interviews.

Are you ready to try A/B testing?

Try Google’s resource for creating a simple A/B test. Before long, you’ll be setting measurable goals, getting results and data, and optimizing your marketing campaigns.

Of course, be sure to stay up-to-date with the ever-changing testing methods and tools to get the most accurate information for your business. A/B testing is vital for providing important business metrics and ensuring that your marketing strategies pay off.
